1. Why GEO Matters

Generative / Answer Engine Optimization (GEO/AEO) is about one thing:

Getting your product or content to be recommended by large language model (LLM) systems such as ChatGPT, Claude, Gemini, Perplexity, and AI-enhanced search experiences.

The search landscape has shifted:

- People used to go directly to Google any time they:

- Had a question

- Wanted a product recommendation

- Were doing research

- Now a large and fast-growing share of that behavior is moving into:

- ChatGPT

- Claude

- Gemini

- Perplexity

- AI modes inside Google search itself (e.g., AI Overviews and chat-like UIs)

This is not the first time SEO has gone through radical change:

- In the late 2000s, mass auto-generated “programmatic SEO” pages (e.g., scraped comparison pages) were heavily penalized.

- That shift took SEO from “spam is viable” to “spam is not viable” and was the largest structural change.

- GEO / AEO is effectively the second-largest shift:

- Answers are now summaries of many sources, not just a ranked list of links.

- New inputs like Reddit, YouTube, and affiliates are heavily used.

- Long, conversational questions create a much larger long tail.

At the same time, GEO currently delivers highly qualified traffic:

- In one observed case, LLM-originating traffic converted ~6× better than Google search traffic.

- In that same case, around 8% of product signups were already attributed to LLM traffic.

- Many content businesses are already seeing LLM tools become a top referral source, even exceeding social platforms in some cases.

In short:

- GEO is already a substantial, high-intent channel.

- It is still under-optimized by most teams.

- It is particularly attractive for early-stage companies, which historically had to wait years before they could win at SEO.

The rest of this guide explains how GEO works, how it relates to SEO, and how to systematically win.

It is also important to acknowledge what many operators are already seeing in their own data:

- In a number of industries, organic SEO traffic has declined on the order of 20–40% as users increasingly ask questions directly in AI tools instead of clicking through 10 blue links.

- At the same time, AI assistants like ChatGPT have reached hundreds of millions of weekly active users, making them a de facto “operating system” for a large share of research and discovery behavior.

This does not mean that Google search is “dead.” Search remains enormous, and in many categories Google’s share of the overall discovery pie is still very large. What it does mean is that, for many individual companies, the mix of where discovery happens is changing. GEO/AEO is how you compete in this new mix.

Finally, it helps to clearly distinguish GEO/SEO from paid acquisition. Paid channels (search ads, social ads) are like renting someone else’s stage—the moment you stop paying, the traffic stops, and prices can change overnight. GEO/SEO investments are more like building on your own land:

- High-quality pages and citations can continue to drive traffic and revenue for years.

- As “land values” rise (more people searching, more people using AI assistants), the asset you’ve built can become more valuable rather than less.

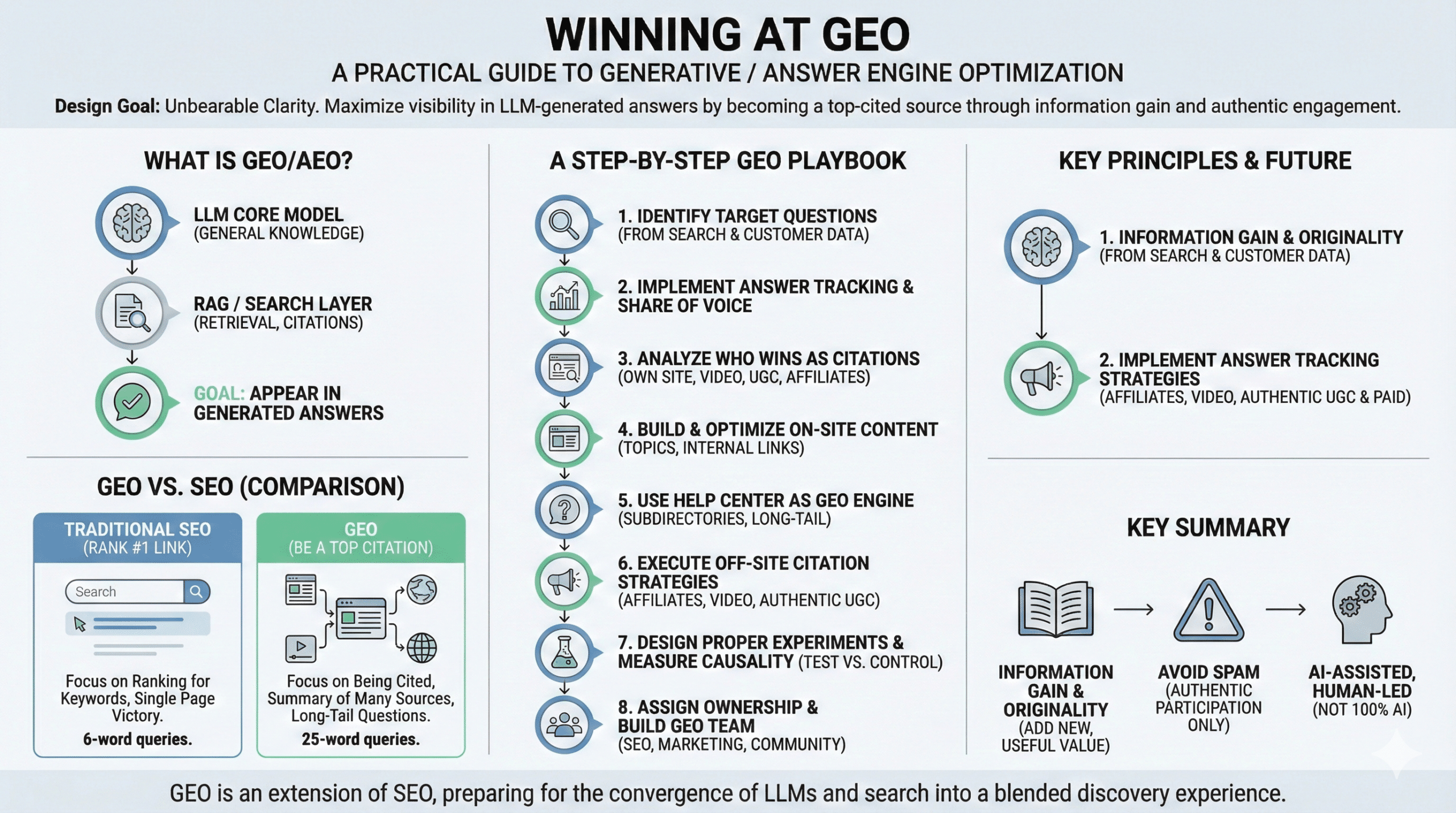

2. What GEO / AEO Actually Is

Two terms are in circulation:

- AEO – Answer Engine Optimization

- GEO – Generative Engine Optimization

They both describe the same practical goal:

Increasing the frequency and quality with which your product, brand, or content appears inside answers generated by LLM-powered products.

AEO is often preferred as a label because:

- “Answer” more precisely describes what users are getting: a text answer.

- “Generative” could include images, video, and other modalities that are beyond the scope of most current optimization work.

In practice, the discipline is about optimizing for answers, not for raw content generation.

2.1 The System Behind GEO: LLM + RAG

Modern LLM-based answer products are structured in two main layers:

- Core model (pretrained LLM)

- Trained on massive datasets (e.g., Common Crawl and other web-scale corpora).

- Learns general world knowledge and language patterns.

- If you ask a simple factual question like “What is the capital of California?”, the core model can often answer directly (“Sacramento”) from its training.

- RAG / Search layer (Retrieval-Augmented Generation)

- For many queries, especially product and research queries, the system:

- Runs a search over the web or an index.

- Retrieves a set of citations (web pages, Reddit threads, videos, etc.).

- Summarizes those citations into an answer.

- This layer is responsible for:

- Freshness (using recent pages).

- Linking to sources.

- Surfacing niche content.

GEO is primarily about influencing the RAG / search layer:

- You want to be in the pool of retrieved citations.

- You want to be mentioned often and positively in those citations.

- You want your content to be structured so it’s helpful and likely to be summarized.

Trying to influence the core model directly (via training) is:

- Extremely difficult to do in a targeted way.

- Slow to propagate.

- Not where most practical GEO leverage sits.

In many current implementations, the retrieval layer works roughly like this:

- The user asks a natural-language question in an AI assistant.

- The assistant converts that question into one or more search queries.

- Those queries are sent to a web search engine (today, ChatGPT commonly uses Bing under the hood).

- The search engine returns a ranked list of URLs.

- The assistant fetches content from those URLs, feeds it into the LLM, and synthesizes the final answer (often with citations).

This means that classic white-hat SEO (good content, clean structure, crawlability, and legitimate backlinks) still directly affects which pages web search returns, and therefore which pages are likely to be retrieved and cited in GEO.
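To make the retrieval loop concrete, here is a toy Python sketch. Everything in it is hypothetical: `INDEX` stands in for the web, `search` for an engine such as Bing, and `rewrite_to_queries` for the assistant's own query rewriting (real assistants use the model itself, not a stopword filter). It only illustrates the structural point: a page the search step never returns cannot become a citation.

```python
from collections import Counter

# Hypothetical in-memory "web index" (URL -> page text) standing in for a real search engine.
INDEX = {
    "https://example.com/best-crm": "best crm for small business acme crm and zed crm compared",
    "https://reddit.com/r/sales/abc": "we switched to acme crm small business friendly",
    "https://example.org/crm-pricing": "crm pricing guide for enterprise teams",
}
STOPWORDS = {"what", "is", "the", "a", "an", "for", "my", "which"}


def rewrite_to_queries(question: str) -> list[str]:
    """Stand-in for LLM query rewriting: a full keyword query plus a shorter variant."""
    words = [w.strip("?.,!").lower() for w in question.split()]
    keywords = [w for w in words if w and w not in STOPWORDS]
    return [" ".join(keywords), " ".join(keywords[:3])]


def search(query: str, k: int = 2) -> list[str]:
    """Stand-in for a search engine: rank URLs by how many query terms they contain."""
    terms = set(query.split())
    scores = Counter({url: len(terms & set(text.split())) for url, text in INDEX.items()})
    return [url for url, score in scores.most_common(k) if score > 0]


def retrieve_citations(question: str, k: int = 2) -> list[str]:
    """Fan out to several queries and merge results into one de-duplicated citation pool."""
    pool: list[str] = []
    for query in rewrite_to_queries(question):
        for url in search(query, k):
            if url not in pool:
                pool.append(url)
    return pool


print(retrieve_citations("What is the best CRM for a small business?"))
```

In a real assistant, the fetched text of the pooled URLs would then be passed to the LLM to write the final answer with citations.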

3. GEO vs. Traditional SEO

GEO and SEO are not separate worlds. They are tightly related but differ in important ways.

3.1 What stays the same

The foundational truths that carry over:

- You still need high-quality, useful content.

- You still need to think in topics, not just single keywords.

- You still benefit from authority and trust.

- You still leverage:

- Well-structured landing pages

- Clear internal linking

- Off-site mentions and references

In both SEO and GEO:

- A single page is expected to address a cluster of related queries.

- Pages that answer more of the relevant sub-questions tend to perform better.

3.2 What changes at the “head” of demand

In classic SEO:

- For a query like “best website builder”:

- If your page ranks #1 in Google’s blue links, you “win” that search.

In GEO:

- The system performs a search and gathers many citations.

- It then summarizes them into one answer.

- The “winner” is usually the product or resource mentioned most frequently across these citations, not whichever page ranks #1 in a single SERP.

Implication:

- To win high-intent “what’s the best X?” queries, you must:

- Be cited from multiple independent sources.

- Show up repeatedly in the citations pool:

- Your site

- Video platforms

- Reddit threads

- Affiliates

- Blogs and media
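A rough way to see the "most mentioned wins" dynamic is to tally how many distinct cited pages mention each brand. The sketch below is a naive heuristic under stated assumptions (plain substring matching, one vote per page, hypothetical brands and URLs); it is not how any particular LLM weighs its sources.

```python
from collections import Counter


def mention_counts(citations: dict[str, str], brands: list[str]) -> Counter:
    """Count how many distinct cited pages mention each brand (case-insensitive substring match)."""
    counts: Counter = Counter()
    for text in citations.values():
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1  # at most one vote per page, however often the page repeats it
    return counts


# Hypothetical citation pool for "best website builder".
pool = {
    "https://example.com/best-website-builders": "Acme, Zed and Orbit are solid picks",
    "https://youtube.com/watch?v=demo": "In this review we test Acme and Zed",
    "https://reddit.com/r/webdev/thread": "Acme has been great for our agency",
    "https://orbit.example/blog": "Why Orbit is the best website builder",
}
print(mention_counts(pool, ["Acme", "Zed", "Orbit"]).most_common())
# [('Acme', 3), ('Zed', 2), ('Orbit', 2)]
```

Note that Orbit's own blog counts as only one of its two mentions: a single owned page cannot outvote several independent sources.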

3.3 What changes in the long tail

The long tail of questions is much larger in LLM chat than in traditional search:

- Average length of a Google search query: roughly 6 words.

- Average length of LLM questions (e.g., in Perplexity): around 25 words.

- LLM UX encourages:

- Follow-up questions

- Multi-step clarification

- Very specific, conversational questions

Many of these questions:

- Were never entered into Google as search queries.

- Are new and extremely specific (e.g., combining a particular workflow, integration, and context).

This means:

- The tail of questions is significantly larger and richer.

- There are many new opportunities where:

- No one has written a direct article.

- There is little or no competition.

- You can become the only or primary citation.

In classic long-tail SEO circa 2007:

- Teams created individual pages for every long-tail keyword, which led to spam.

- That strategy no longer works for SEO.

In GEO:

- The long tail returns as conversational Q&A, not one-page-per-keyword spam.

- The winning strategy is to:

- Understand these specific questions.

- Ensure your site and help center actually answer them.

3.4 Stage of company: SEO vs GEO

For early-stage companies:

- Traditional SEO is a slow burn:

- You start with low domain authority.

- It can take years before you rank for competitive keywords.

For GEO:

- You can show up in answers immediately if:

- People mention you in a Reddit thread.

- You publish a YouTube video.

- A blog or launch write-up includes you.

- Brand-new startups that are being talked about can appear in LLM answers right away.

Practical rule of thumb:

- Early-stage:

- SEO: often not worth heavy investment at the very beginning.

- GEO: absolutely worth pursuing through citations and long-tail questions, even at the earliest stages.

4. Core Strategic Principles of GEO

This section summarizes the main strategic concepts that underlie every tactical move in GEO.

4.1 Topics and sub-questions

Instead of thinking:

- “One page = one keyword”

Think:

- “One page = one topic = hundreds or thousands of related questions”

For example, a “website builder” topic may include:

- “Best website builder for designers”

- “Best no-code website builder for SaaS”

- “Best website builder for small business”

- “Is [tool] good for ecommerce?”

- “Does [tool] support multi-language sites?”

- etc.

Your goal for each topic:

- Identify all the sub-questions and follow-up questions that users ask.

- Make sure your page and/or help-center content answers those sub-questions.

- The more complete your coverage, the more likely:

- Google ranks you.

- LLMs use your content in answers.
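A minimal way to audit topic coverage, assuming you already have the topic's sub-questions and a record of which pages answer which ones (both hypothetical inputs here), is to compute the share answered and list the gaps. Exact string matching is a simplification; in practice the mapping from questions to pages usually comes from human review or semantic matching.

```python
def coverage_report(topic_questions: list[str],
                    answered_by_page: dict[str, set[str]]) -> tuple[float, list[str]]:
    """Return (share of sub-questions answered anywhere on the site, unanswered sub-questions)."""
    answered = set().union(*answered_by_page.values())
    gaps = [q for q in topic_questions if q not in answered]
    if not topic_questions:
        return 0.0, gaps
    return (len(topic_questions) - len(gaps)) / len(topic_questions), gaps


topic = [
    "Best website builder for designers",
    "Best no-code website builder for SaaS",
    "Best website builder for small business",
    "Is [tool] good for ecommerce?",
    "Does [tool] support multi-language sites?",
]
# Hypothetical mapping of site pages to the sub-questions they answer.
pages = {
    "/website-builder": {topic[0], topic[1], topic[2]},
    "/help/multi-language": {topic[4]},
}
score, gaps = coverage_report(topic, pages)
print(score, gaps)  # 0.8 ['Is [tool] good for ecommerce?']
```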

4.2 Question research

Question research is harder in GEO than keyword research in classic SEO:

- Google provides keywords and search volumes via ads APIs.

- LLM providers do not provide a public “truth set” of questions and their frequencies.

However, useful proxies exist:

- Search data → questions

- Take your SEO and paid search keywords.

- Take your competitors’ paid search keywords (“money terms”).

- Convert these into natural-language questions.

- This can be done at scale with an LLM.

- Customer-facing data → questions

- Extract questions from:

- Sales calls

- Customer support tickets

- Live chat

- Community conversations (e.g., Reddit threads)

- Many of these questions represent the long tail that never showed up in SEO keyword tools.

Combine these into a question library, grouped into topics. That library drives:

- Landing page content

- Help-center content

- GEO tracking

- Off-site content strategy
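The two proxies above can be sketched in a few lines. `keyword_to_question` is a hypothetical template stand-in for the LLM conversion step the text describes, and `extract_questions` uses a simple regular expression that treats any sentence ending in "?" as a question; both are assumptions meant to show the shape of the pipeline, not a production extractor.

```python
import re

# A "question" here is any run of text without sentence punctuation that ends in '?'.
QUESTION_RE = re.compile(r"[^.?!\n]*\?")


def keyword_to_question(keyword: str) -> str:
    """Template stand-in for LLM rewriting of a search keyword into a natural question."""
    return f"What is the best {keyword.strip()}?"


def extract_questions(text: str) -> list[str]:
    """Pull question sentences out of sales-call notes, tickets, chats, or threads."""
    return [m.group().strip() for m in QUESTION_RE.finditer(text)]


def build_library(keywords: list[str], transcripts: list[str]) -> list[str]:
    """Combine both sources into one de-duplicated question list (first-seen order)."""
    questions = [keyword_to_question(k) for k in keywords]
    for text in transcripts:
        questions.extend(extract_questions(text))
    return list(dict.fromkeys(questions))


print(build_library(
    ["no-code website builder"],
    ["Thanks for the demo. Does it integrate with Zapier? Also, can I export to BigQuery?"],
))
```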

4.3 Information gain and originality

A major problem in SEO today:

- Many pages are written by:

- Non-experts

- Content tools that copy competitor outlines

- Writers often rely on “content scoring” tools that:

- Scrape the top Google results.

- Recommend including all the same subheadings and phrases.

- Encourage imitation instead of originality.

The result:

- Almost everyone rewrites everyone else’s content.

- Most articles add little or no new information.

- Most landing pages drive no measurable traffic or impact:

- One internal analysis found that 1 out of 20 landing pages generated ~85% of all traffic.

- The remaining 19 out of 20 contributed almost nothing.

That economic reality leads many teams to:

- Spend minimal resources per page.

- Accept commodity, derivative content.

From a GEO perspective, a useful mental model is information gain:

- Are you adding new, useful information that no one else has?

- Are you bringing:

- Original research?

- Domain expertise?

- Concrete examples?

- Unique workflows or case studies?

Pages that bring information gain are:

- More valuable to users.

- More valuable to search algorithms.

- More valuable to LLM summarization systems.

4.4 Avoiding spam and aligning with platforms

Platforms (search engines, LLMs, Reddit) systematically clamp down on spammy strategies:

- Mass auto-generated pages

- Scraped content

- Huge fleets of fake accounts

- Repetitive, low-value posts

History:

- Early shopping comparison sites thrived on scraped, spam-like content and then were heavily downranked.

- The same pattern is expected with:

- 100% AI-generated, unedited content at scale.

- Automated UGC manipulation (e.g., fake Reddit accounts).

Long-term viable strategies are:

- High-quality, authentic participation (especially on UGC platforms).

- Content with information gain, not just repetition.

- Experimentation within the boundaries of what platforms ultimately reward.

4.5 The algorithm-jacking mindset

Another helpful mental model is to think in terms of “algorithm-jacking”:

- In the social era, some of the fastest-growing creators and brands succeeded by deeply understanding what the feed algorithms wanted (e.g., watch time, click-through, emotional hooks) and shaping their content to match that, while still serving real user desires.

- In GEO, the “algorithm” is the LLM + retrieval stack. It “wants”:

- Clean, crawlable pages.

- Clear question-and-answer structure.

- Authoritative sources and reviews.

- Up-to-date, structured product data.

- Authentic human discussions and recommendations.

Your job is to work backwards from that behavior and design your content footprint accordingly. Instead of starting from “What do I want to publish?” and hoping the algorithm picks it up, start from “What would the answer engine want to show for this question?” and then build the pages, citations, and reviews that best fit that brief.

5. A Step-by-Step GEO Playbook

This section turns the principles into a concrete workflow.

Step 1: Identify target questions (and topics)

- Start from search and paid data:

- Export:

- Your top keywords from organic search.

- Your top paid search terms.

- Your competitors’ paid search terms (their “money terms”).

- Convert these into questions:

- Use an LLM to turn lists of keywords into natural questions.

- For example:

- Keyword: “AI payment processing API” → Question: “What is the best AI-powered payment processing API?”

- Keyword: “no-code website builder” → Question: “What is the best no-code website builder?”

- Expand using customer-facing data:

- Pull real questions from:

- Sales calls

- Support tickets

- Community posts

- Include very specific workflows and edge cases.

- Cluster into topics:

- Group related questions under a shared topic.

- Each topic will usually map to one main landing page plus related help-center content.
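Clustering can start very simply. The sketch assigns each question to the first hypothetical seed phrase it contains; many teams would use an LLM or embeddings instead, so treat seed matching as an illustrative baseline rather than a recommended method.

```python
def cluster_by_seed(questions: list[str], seeds: list[str]) -> dict[str, list[str]]:
    """Group questions under the first topic seed phrase they contain; the rest go to 'unclustered'."""
    clusters: dict[str, list[str]] = {seed: [] for seed in seeds}
    clusters["unclustered"] = []
    for question in questions:
        lowered = question.lower()
        topic = next((s for s in seeds if s.lower() in lowered), "unclustered")
        clusters[topic].append(question)
    return clusters


questions = [
    "What is the best no-code website builder?",
    "Does the website builder support multi-language sites?",
    "What is the best AI-powered payment processing API?",
    "How do I export call transcripts to BigQuery?",
]
for topic, members in cluster_by_seed(questions, ["website builder", "payment processing"]).items():
    print(topic, members)
```

Questions left in `unclustered` are often the most interesting ones: they may signal a topic (and a landing page) you have not planned yet.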

Step 2: Implement answer tracking and share of voice

You need a way to measure:

- How often you appear in answers.

- How prominently you’re mentioned.

Unlike keyword ranking:

- LLM answers can differ every time you run the same query:

- There is a distribution of possible answers.

- There are multiple surfaces:

- ChatGPT

- Gemini

- Perplexity

- Other LLM interfaces

- There are multiple phrasings of each question.

An answer tracking system should:

- Take a list of questions.

- For each question:

- Query chosen LLM surfaces repeatedly.

- Use multiple phrasings of the question.

- Record:

- Whether your brand is mentioned.

- Whether your site is cited.

- The position/order of mentions.

- Aggregate into metrics such as:

- Presence: % of runs where you appear.

- Average rank: typical position when you appear.

- Share of voice: your presence across questions and surfaces relative to others.
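The three aggregate metrics can be computed from raw tracking runs as follows. The `Run` record and the share-of-voice definition (your appearances divided by all brand appearances across runs) are assumptions for illustration; commercial tools may define these metrics differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    question: str
    surface: str         # e.g. "chatgpt", "perplexity", "gemini"
    mentions: list[str]  # brands in the order they appear in the answer


def presence(runs: list[Run], brand: str) -> float:
    """Fraction of runs in which the brand appears at all."""
    return sum(brand in r.mentions for r in runs) / len(runs) if runs else 0.0


def average_rank(runs: list[Run], brand: str) -> Optional[float]:
    """Mean 1-based position of the brand, over runs where it appears (None if it never does)."""
    ranks = [r.mentions.index(brand) + 1 for r in runs if brand in r.mentions]
    return sum(ranks) / len(ranks) if ranks else None


def share_of_voice(runs: list[Run], brand: str) -> float:
    """Brand appearances divided by all (deduplicated per run) brand appearances."""
    total = sum(len(set(r.mentions)) for r in runs)
    ours = sum(brand in r.mentions for r in runs)
    return ours / total if total else 0.0


runs = [
    Run("best crm for startups", "chatgpt", ["Acme", "Zed"]),
    Run("best crm for startups", "chatgpt", ["Zed"]),
    Run("best crm for startups", "perplexity", ["Zed", "Acme", "Orbit"]),
    Run("crm with slack integration", "gemini", ["Acme"]),
]
print(presence(runs, "Acme"), average_rank(runs, "Acme"), share_of_voice(runs, "Acme"))
```

Repeating the same question on the same surface (the first two runs) is deliberate: it samples the distribution of answers rather than a single draw.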

There are many answer-tracking tools; functionally they:

- Behave similarly to keyword tracking in SEO.

- Differ in price, UI, and coverage.

- Can be treated as commodity measurement utilities for this purpose.

In addition to dedicated answer-tracking products, some AI assistants now self-identify as traffic sources by appending specific utm_source and related parameters when they send users to your site. In modern analytics tools (including many marketing automation platforms), this shows up as an “AI” or “chatbot” referral channel. Treat this as a complementary signal:

- Answer tracking tells you how often and how prominently you appear in answers.

- Analytics-level AI referral data tells you how much traffic and how many conversions those answers are actually sending.
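A minimal classifier for AI referrals might check the `utm_source` parameter first and fall back to the referrer host. ChatGPT has been observed appending `utm_source=chatgpt.com` to outbound links; the other hosts in `AI_REFERRER_HOSTS` are an illustrative, non-exhaustive list (an assumption) to verify against your own analytics data.

```python
from urllib.parse import parse_qs, urlparse

# Assumed values; confirm against the utm_source and referrer values you actually see.
AI_UTM_SOURCES = {"chatgpt.com"}
AI_REFERRER_HOSTS = {
    "chatgpt.com", "chat.openai.com", "perplexity.ai", "www.perplexity.ai",
    "gemini.google.com", "claude.ai", "copilot.microsoft.com",
}


def is_ai_referral(landing_url: str, referrer: str = "") -> bool:
    """Flag a session as AI-referred via utm_source, else via the referrer's hostname."""
    params = parse_qs(urlparse(landing_url).query)
    sources = {s.lower() for s in params.get("utm_source", [])}
    if sources & AI_UTM_SOURCES:
        return True
    host = urlparse(referrer).hostname or ""  # .hostname is already lowercased
    return host in AI_REFERRER_HOSTS


print(is_ai_referral("https://example.com/pricing?utm_source=chatgpt.com"))
print(is_ai_referral("https://example.com/", "https://www.perplexity.ai/search?q=crm"))
```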

Step 3: Analyze who currently wins as citations

For each target question:

- Run it in your chosen LLM surfaces.

- Look at:

- The text answer:

- Which brands are recommended?

- The citations:

- Which domains and URLs are referenced?

- Categorize citations into types, such as:

- Your own site

- Video (e.g., YouTube, Vimeo)

- User-generated content (UGC) (e.g., Reddit threads)

- Tier-1 affiliates and media:

- Large publishing networks (e.g., Dotdash Meredith properties such as People, Allrecipes, and Investopedia)

- Tier-2 affiliates and niche blogs

- Marketplaces and review sites (depending on the vertical):

- Examples include Yelp, Tripadvisor, and Eater.

This mapping tells you:

- Where LLMs are sourcing answers for your topics.

- Which surfaces you need to influence.
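Categorizing citations by domain is easy to automate once you have the cited URLs. The mapping below is illustrative (a few domains named in this guide); the category labels and the `tier2_or_other` fallback are assumptions you would refine by hand.

```python
from collections import Counter
from urllib.parse import urlparse

# Illustrative domain -> citation-type mapping; extend it with the domains you actually observe.
CATEGORY_BY_DOMAIN = {
    "youtube.com": "video",
    "vimeo.com": "video",
    "reddit.com": "ugc",
    "investopedia.com": "tier1_affiliate_media",
    "allrecipes.com": "tier1_affiliate_media",
    "yelp.com": "marketplace_review",
    "tripadvisor.com": "marketplace_review",
}


def matches(host: str, domain: str) -> bool:
    """True for the domain itself or any subdomain (www., old., m., help., ...)."""
    return host == domain or host.endswith("." + domain)


def categorize(url: str, own_domain: str) -> str:
    host = (urlparse(url).hostname or "").lower()
    if matches(host, own_domain):
        return "own_site"
    for domain, category in CATEGORY_BY_DOMAIN.items():
        if matches(host, domain):
            return category
    return "tier2_or_other"  # niche blogs, smaller affiliates, and anything unmapped


def category_mix(urls: list[str], own_domain: str) -> Counter:
    return Counter(categorize(url, own_domain) for url in urls)


citations = [
    "https://www.youtube.com/watch?v=abc",
    "https://old.reddit.com/r/crm/comments/xyz",
    "https://help.example.com/integrations/slack",
    "https://someblog.example.net/best-crm",
]
print(category_mix(citations, "example.com"))
```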

Step 4: Build and optimize on-site content

For each topic:

- Identify the dominant page types currently used in citations:

- Are they listicles?

- Category pages?

- Long-form guides?

- Product or tool pages?

- Create or refine your own landing pages that:

- Address the main question clearly.

- Cover as many sub-questions as possible, such as:

- Features

- Integrations

- Use cases

- Supported languages

- Implementation details

- Limitations and trade-offs

- Ensure strong internal linking:

- Connect topic pages to:

- Related help-center content.

- Related product pages.

- Make it easy for crawlers and retrieval systems to:

- Discover related content.

- Understand your site’s topical structure.

The aim is to make your on-site content:

- A comprehensive, high-value citation for the topic.

- A natural candidate to be:

- Ranked in search.

- Selected by LLM retrieval.

In practice, it helps to structure your content explicitly as questions and answers, not just long-form narrative. For example:

- Use headings phrased as questions (e.g., “What is the best CRM for small businesses?”).

- Under each heading, provide a concise, direct answer first, then supporting detail.

- Include FAQ-style sections that mirror how a user might phrase their prompt in an AI assistant.

This reduces friction for the retrieval and summarization stack: it is easier for the assistant to locate the exact answer to a specific question when your page is already organized that way.
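If you also want machine-readable Q&A markup, schema.org defines an `FAQPage` type whose `mainEntity` holds `Question` items with an `acceptedAnswer`. The sketch below generates that JSON-LD from the same question/answer pairs shown on the page. The example content is hypothetical, and there is no guarantee that any particular answer engine reads this markup, so the visible Q&A structure remains the main lever.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build schema.org FAQPage markup from (question, answer) pairs that also appear on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def faq_script_tag(pairs: list[tuple[str, str]]) -> str:
    """Serialize for HTML; escaping '</' (valid in JSON as '<\\/') stops answer text closing the tag."""
    payload = json.dumps(faq_jsonld(pairs), ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


print(faq_script_tag([
    ("Does the CRM integrate with Slack?",  # hypothetical product Q&A
     "Yes. Connect it under Settings > Integrations > Slack."),
]))
```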

Step 5: Use your help center as a GEO engine

Help-center content is especially valuable in the GEO era because:

- Users ask LLMs many follow-up questions about specific capabilities, integrations, and edge-case workflows.

- Those answers often live in your knowledge base.

Practical enhancements:

- Subdirectory vs. subdomain

- Move help center from help.example.com to example.com/help where possible.

- Subdirectories tend to consolidate authority better than subdomains.

- Internal linking

- Link help articles to each other where relevant.

- Link from product/marketing pages into relevant help content.

- Ensure there are clear paths through related workflows and concepts.

- Cover the long tail of workflows

- Use sales conversations, support tickets, and customer success questions to identify real but niche workflows.

- Example of a long-tail use case:

- Tracking sales calls, participants, and sentiment in a BI tool such as Looker:

- There may be no transcription tool that integrates directly with the BI tool.

- But one may integrate with Zapier.

- Data can then flow from that transcription tool, via Zapier, to a data warehouse (e.g., BigQuery) and on into the BI tool.

- That workflow may not currently have a help article, but it is:

- Real

- Valuable

- A perfect candidate for a help-center article.

- Leverage community contributions

- Consider allowing:

- Customers to ask questions publicly.

- Community members to answer and propose workflows.

- This expands coverage of the long tail:

- Especially for integrations and advanced use cases.

Because many long-tail questions have no other good sources, a single well-written help article can:

- Become the primary citation for those questions.

- Secure significant GEO visibility for specialized queries.
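Internal-linking gaps can be found mechanically. Given a crawl of your own site as a map from each page to the pages it links to (a hypothetical input format here), any page that nothing else links to is an orphan and a candidate for new links from related help or product pages.

```python
def orphan_pages(links: dict[str, set[str]]) -> list[str]:
    """Pages with no inbound internal links from any other page (self-links ignored)."""
    linked_to: set[str] = set()
    for source, targets in links.items():
        linked_to |= {t for t in targets if t != source}
    return sorted(page for page in links if page not in linked_to)


# Hypothetical crawl: page -> pages it links to.
site = {
    "/pricing": {"/help/zapier"},
    "/help/zapier": {"/help/bigquery-export"},
    "/help/bigquery-export": set(),
    "/help/looker-call-analytics": {"/help/zapier"},
}
print(orphan_pages(site))  # ['/help/looker-call-analytics', '/pricing']
```

Top-level pages such as `/pricing` usually get their inbound links from navigation, so in practice you would exclude nav-linked pages and focus on orphaned help articles like the long-tail workflow page here.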

Step 6: Execute off-site citation strategies

Off-site citations are essential at the “head” of demand and strongly influence GEO outcomes.

Key off-site levers include:

6.1 Affiliates and large media

For many “best X” queries:

- Large media/affiliate sites dominate citations:

- Examples include major review publishers and networks (e.g., Dotdash Meredith brands).

A direct strategy:

- Pay or partner to appear prominently in their “best X” lists, especially in categories like:

- Credit cards

- Financial products

- Consumer software and tools

This strategy is:

- Expensive

- Highly controllable

- Effective for getting:

- Consistent citations in heavily referenced content

- Visibility in LLM answers for high-intent “best” queries

6.2 YouTube, Vimeo, and other video platforms

Video plays a major role in citations, especially for:

- Tutorials

- Product walkthroughs

- Comparisons and reviews

A notable opportunity is in B2B / technical topics:

- Many video platforms are saturated with:

- Food

- Travel

- Beauty

- General entertainment

- There are far fewer videos for:

- Specific B2B workflows

- Niche technical products (e.g., AI-powered payment processing APIs)

Creating videos for these high-value, low-competition topics can:

- Give you prominent citations across many queries.

- Provide rich content for LLMs to reference.

6.3 Reddit and other UGC platforms

Reddit is especially important because:

- It is heavily referenced by LLMs.

- It is one of the most widely surfaced UGC sources in both search results and LLM citations.

Two broad approaches exist, with very different outcomes:

- Automated spam approach (not recommended)

- Create many fake accounts.

- Use automation to post promotional comments and upvote them.

- Attempt to push your product as “best” in every relevant thread.

What actually happens:

- Accounts are banned.

- Comments are deleted.

- The community resists obvious manipulation.

- This approach fails and can damage your reputation.

- Authentic participation approach (recommended)

- Use a small number of real accounts belonging to actual team members.

- Clearly state:

- Who they are.

- Which company they work for.

- Target:

- Threads that are already visible as citations for your target topics.

- Contribute:

- Useful, honest, detailed answers.

- Product mentions only where genuinely relevant.

With this approach:

- You do not need thousands of comments.

- A handful of authentic, high-value contributions in the right threads can be enough to:

- Influence citations.

- Improve GEO outcomes.

Reddit works as a GEO surface partly because:

- Its community moderation is strong.

- Search and LLM teams deliberately prioritize it as a trusted UGC source, while still monitoring and adjusting if quality drops.

Step 7: Design proper experiments and measure causality

Because there is so much untested advice, it is important to evaluate GEO tactics experimentally.

A simple experimental design:

- Select a set of questions, for example 200.

- Split into:

- Control group (e.g., 100 questions):

- No changes or interventions.

- Test group(s) (e.g., 100 questions):

- Apply specific interventions (e.g., Reddit activity, new videos, affiliate placements, landing page changes).

- Turn on answer tracking for all groups:

- Collect a pre-intervention baseline for a few weeks.

- Implement interventions only for test questions:

- Launch Reddit participation on selected threads.

- Publish targeted videos.

- Secure affiliate list placements.

- Update or create pages.

- Continue answer tracking for a post-intervention period.

- Compare:

- Did the test group’s:

- Presence

- Average rank

- Share of voice

improve relative to the control group?

- Did the control group remain mostly flat?

- Reproduce:

- Run similar experiments on new sets of questions.

- Look for consistent patterns before treating a tactic as a repeatable best practice.

This approach:

- Filters out background variance.

- Distinguishes causality from correlation.

- Helps avoid wasting resources on ineffective tactics.
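The comparison step is essentially a difference-in-differences estimate: the test group's change in a metric minus the control group's change. The sketch below randomizes the split (an addition to the design above, to avoid picking "easy" questions for the test group) and uses mean presence per question. It omits significance testing, which you would want (for example, a permutation test) before trusting a small lift.

```python
import random
from statistics import mean


def split_questions(questions: list[str], seed: int = 42) -> tuple[list[str], list[str]]:
    """Randomly assign half of the questions to the test group and the rest to control."""
    shuffled = questions[:]
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def diff_in_diff(before: dict[str, float], after: dict[str, float],
                 test: list[str], control: list[str]) -> float:
    """(test group's change in mean presence) minus (control group's change)."""
    test_lift = mean(after[q] for q in test) - mean(before[q] for q in test)
    control_lift = mean(after[q] for q in control) - mean(before[q] for q in control)
    return test_lift - control_lift


questions = [f"q{i}" for i in range(200)]
test, control = split_questions(questions)
# Synthetic presence numbers: test lifts by 0.15, control drifts by 0.02 -> estimate of about 0.13.
before = {q: 0.20 for q in questions}
after = {q: (0.35 if q in test else 0.22) for q in questions}
print(round(diff_in_diff(before, after, test, control), 4))
```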

Step 8: Assign ownership and build the GEO team

GEO touches multiple functions:

- SEO team / agency / consultant

- Owns topics, on-site content, technical implementation, and tracking.

- Marketing / community / growth team

- Owns content creation for:

- YouTube/Vimeo

- Reddit and UGC

- Affiliate relationships and PR

A practical division of labor:

- SEO function:

- Extend responsibilities from classic SEO to include:

- GEO question research

- Topic clustering

- Landing page and help-center optimization

- Answer tracking and experiment design

- Marketing/community function:

- Focus on:

- Producing high-quality video content.

- Building authentic presence on Reddit and other UGC platforms.

- Managing affiliate deals and media placements.

6. GEO by Company and Channel Type

6.1 Early-stage companies

Recommended focus:

- Do not over-invest in classic SEO too early, due to low domain authority.

- Do invest early in GEO through:

- Citation optimization:

- Being mentioned on blogs, launch write-ups, and community sites.

- Participating authentically in relevant Reddit threads.

- Long-tail topics:

- Answering specific, high-intent questions that only your product can handle.

Avoid:

- Spending heavy resources trying to rank for mid-head, highly competitive SEO keywords at the earliest stages.

6.2 B2B SaaS

Citation characteristics:

- B2B-related queries often surface:

- Specialized review and tech sites (e.g., industry publications, comparison platforms, and tech review sites such as TechRadar).

Measurement challenges:

- Many B2B LLM answers do not include clickable links.

- Users may:

- Later search your brand in Google (attributed as branded search).

- Navigate directly to your site (attributed as direct traffic).

To measure B2B GEO impact:

- Combine:

- Answer tracking metrics (presence and rank across questions).

- “How did you hear about us?” questions on signup or in product.

The B2B purchase journey:

- Often involves many touchpoints.

- GEO becomes one crucial research and evaluation touchpoint.

6.3 Commerce, local, and marketplaces

LLM experiences for commerce and local queries increasingly include:

- Clickable product cards

- Shopping carousels

- Maps and local listings

Examples:

- “Best TV for a small apartment”

- “Best restaurants near [location]”

Key success factors:

- Strong schema / structured data.

- Use structured product catalogs that expose attributes such as size, color, variants, and other key properties in a machine-readable way.

- Represent information like price, discounts, and shipping options consistently so answer engines can easily compare offers.

- Keep inventory and availability status accurate—assistants strongly prefer to recommend products that are actually in stock and can be delivered within a reasonable window.

- Robust review counts and ratings.

- Presence on:

- Consumer publishers and affiliates.

- Local and review platforms.
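The structured-data factors above map onto schema.org's `Product`, `Offer`, and `AggregateRating` types. The sketch emits JSON-LD for a hypothetical product. It covers price, currency, stock status, and ratings only; richer catalogs would add further schema.org properties (variants, shipping details) on top of this.

```python
import json


def product_jsonld(name: str, sku: str, price: float, currency: str,
                   in_stock: bool, rating: float, review_count: int) -> dict:
    """schema.org Product markup exposing price, stock status, and ratings in machine-readable form."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


# Hypothetical product; regenerate whenever price or inventory changes so the markup stays accurate.
print(json.dumps(product_jsonld("Example 43-inch 4K TV", "TV-43-EX", 329.0, "USD", True, 4.6, 812), indent=2))
```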

In these verticals:

- GEO feels closer to SEO in terms of measurement:

- There are explicit clicks from cards and local modules.

- You can track:

- Last-touch referrals and conversions from LLM-infused experiences.

7. AI-Generated Content and GEO

A large wave of tools now promise:

- Fully automated, AI-generated content for SEO and GEO.

- Mass production of landing pages and articles at low cost.

However, analysis of real-world data reveals:

7.1 Detection and prevalence

A structured study can be summarized as follows:

- Thousands of Google search results and ChatGPT citations were collected across many queries.

- A commercial AI-content detector was evaluated by:

- Testing it on thousands of known AI-generated articles.

- Testing it on a large random sample of pages from a period before ChatGPT existed (so they must be human-written).

- False positive rates on the pre-LLM sample were around 8%, indicating acceptable accuracy for aggregate analysis.

Key findings:

- Roughly 10–12% of content surfaced in:

- Google search results and

- ChatGPT citations

appeared to be AI-generated.

- Around 90% of surfaced content was non-AI-generated.

Separately:

- Analysis over time on large web samples suggested that:

- Across the whole internet, AI-generated content has become more prevalent than human-generated content in sheer volume.

7.2 Why fully AI-generated content does not win

Despite the prevalence of AI-generated pages:

- The content that actually ranks in Google and gets cited in LLM answers remains overwhelmingly human-generated or human-edited.

If 100% automated AI content did work at scale:

- Everyone would adopt it, because it is cheaper than human authorship.

- Given the volume of AI content already produced, search engines would effectively become:

- Search engines for ChatGPT outputs, not for independent human viewpoints.

- This scenario mirrors the older case where:

- Google became a search engine for vertical search engines (e.g., comparison sites).

- Google responded by:

- Removing many of those vertical search results.

- Going directly to products.

Similarly, if LLM systems were allowed to:

- Cite their own derivatives extensively.

- Train and retrain on those derivatives.

- Summarize them repeatedly.

Then:

- The system would enter a loop of infinite derivatives of derivatives.

- For diverse opinion-based questions (e.g., “best ice cream flavor”):

- Instead of representing a wide range of human opinions (the “wisdom of the crowd”),

- The system would collapse toward a single answer (“vanilla only”), losing diversity.

This phenomenon aligns with the concept of model collapse:

- On the training side, repeatedly training on model-generated data harms performance.

- On the retrieval/summarization side, repeatedly summarizing summaries of your own outputs similarly reduces diversity.
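A toy simulation shows why repeated self-summarization loses diversity. Each generation only sees a finite sample (with replacement) of the previous generation's outputs, a simplification closer to genetic drift than to real LLM training. Once an opinion is missing from a sample it can never come back, so the number of distinct answers can only stay flat or shrink.

```python
import random
from collections import Counter


def resample_generations(opinions: list[str], generations: int, seed: int = 0) -> tuple[list[int], Counter]:
    """Track the number of distinct opinions as each generation resamples the previous one."""
    rng = random.Random(seed)
    history = [len(set(opinions))]
    current = list(opinions)
    for _ in range(generations):
        current = rng.choices(current, k=len(current))  # sample with replacement from last generation
        history.append(len(set(current)))
    return history, Counter(current)


# Hypothetical "wisdom of the crowd" for "best ice cream flavor".
crowd = ["vanilla"] * 8 + ["chocolate"] * 5 + ["pistachio"] * 3 + ["mango"] * 2 + ["mint"] * 2
history, final = resample_generations(crowd, generations=50)
print(history[0], history[-1], final.most_common(3))
```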

7.3 Practical takeaway for GEO

The sustainable pattern is:

- AI-assisted, human-led content, not:

- 100% AI-generated content with no human involvement.

In practice this means:

- Use AI to help with:

- Drafting

- Structuring

- Editing

- But ensure:

- Human experts contribute real domain knowledge.

- Content offers information gain and originality.

- Content is not simply a derivative remix of existing AI outputs.

8. Indexing vs. Training: Should You Let LLMs Use Your Content?

For content creators and product companies, there is a natural concern:

- LLMs use your content to answer questions.

- They may send only a fraction of that traffic back to you.

The key strategic points:

- You are in the game by default

- If your competitors allow indexing and you do not:

- They will appear in answers.

- You will not.

- The choice is not whether the game exists; it is:

- Whether you participate and benefit.

- Or opt out and let others capture the visibility.

- You can separate indexing from training

- Many systems use different mechanisms (or user agents) for:

- Indexing: retrieving content for RAG and citations.

- Training: using your content to update the core model.

- For example, you may see:

- A search-oriented crawler whose job is to index pages for answer generation.

- A separate training-oriented crawler whose job is to collect data for model training.

- You can configure:

- robots.txt or equivalent controls to:

- Explicitly allow the search/indexing crawler so you are eligible to appear in answers.

- Optionally block the training crawler if you do not want your content used for core model training.

- Practical recommendation

- Allow LLMs to index your content so you can compete in GEO.

- If desired, block training bots while still enabling indexing bots.

- This maintains:

- Your ability to appear in answers.

- More control over how your content is used in model training.
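To make the allow-indexing / block-training split concrete, here is a minimal sketch that checks a robots.txt policy with Python’s standard-library urllib.robotparser before you publish it. It assumes OpenAI’s published crawler names at the time of writing (OAI-SearchBot for search indexing, GPTBot for training data collection). Other vendors use different user agents, and names can change, so confirm them against each vendor’s current documentation. The robots.txt content and the example.com URL are illustrative only.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt. The user-agent tokens are assumptions based on
# OpenAI's published crawler names; verify them (and add other vendors'
# crawlers) against current vendor documentation before deploying.
ROBOTS_TXT = """\
# Search/indexing crawler: allowed, so pages can be cited in answers.
User-agent: OAI-SearchBot
Allow: /

# Training crawler: blocked, per this site's (hypothetical) policy.
User-agent: GPTBot
Disallow: /

# Everyone else: default access.
User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def check_policy(parser: RobotFileParser, url: str) -> dict[str, bool]:
    """Report whether each crawler of interest may fetch `url`."""
    agents = ("OAI-SearchBot", "GPTBot", "Googlebot")
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    for agent, allowed in check_policy(parser, "https://example.com/pricing").items():
        print(f"{agent:15} {'allowed' if allowed else 'blocked'}")
```

urllib.robotparser implements simple prefix matching, so treat this as a sanity check for plain rules like these rather than an exact model of every crawler’s own parser.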

9. The Future: Convergence of LLMs and Search

Search engines and LLM-based products are moving toward each other:

- Search is adding:

- AI Overviews

- Chat interfaces

- LLMs are adding:

- Maps

- Shopping carousels

- Shoppable product cards

This suggests:

- A likely convergence into a single, blended discovery experience where:

- Some queries return direct answers.

- Some invite conversational refinement.

- Some surface structured modules (cards, maps, local listings, etc.).

GEO is therefore not a temporary side channel:

- It is part of a larger structural shift in how people:

- Ask questions.

- Evaluate products.

- Discover and navigate information.

Investments in GEO:

- Prepare you for the future of search.

- Strengthen your content and distribution strategies across channels.

10. GEO Implementation Checklist

Use this checklist as a concise implementation guide.

Strategy and foundations

- ☐ Align leadership on GEO as a material, high-intent channel.

- ☐ Decide to participate by allowing LLM systems to index your content.

- ☐ Clarify whether and how you want to allow training on your content.

- ☐ Audit your robots.txt and bot-blocking rules to ensure AI search/indexing crawlers are allowed while any training crawlers are configured according to your policy.

Question and topic discovery

- ☐ Export your organic and paid search terms.

- ☐ Export competitors’ paid search terms (“money terms”).

- ☐ Convert keywords into natural-language questions.

- ☐ Mine sales calls, support tickets, and community posts for questions.

- ☐ Cluster questions into topics.
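The clustering step can be sketched very simply. Teams often use embeddings or an LLM for this; the sketch below is a deliberately basic stand-in that swaps in greedy clustering on word overlap (Jaccard similarity). The stopword list, the 0.3 threshold, and the sample questions are all hypothetical.

```python
import re

# Illustrative stopword list (an assumption; tune it for your own data).
STOPWORDS = {
    "a", "an", "the", "is", "are", "what", "which", "how", "do", "i", "to",
    "for", "of", "in", "on", "from", "with", "my", "best", "can", "does",
}


def content_words(question: str) -> frozenset[str]:
    """Lowercase the question, split it into words, and drop stopwords."""
    words = re.findall(r"[a-z0-9]+", question.lower())
    return frozenset(w for w in words if w not in STOPWORDS)


def jaccard(a: frozenset[str], b: frozenset[str]) -> float:
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def cluster_questions(questions: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy single pass: each question joins the first cluster whose seed
    question is at least `threshold` similar; otherwise it seeds a new cluster."""
    clusters: list[tuple[frozenset[str], list[str]]] = []
    for question in questions:
        words = content_words(question)
        for seed_words, members in clusters:
            if jaccard(words, seed_words) >= threshold:
                members.append(question)
                break
        else:
            clusters.append((words, [question]))
    return [members for _, members in clusters]


# Hypothetical questions mined from search terms, sales calls, and tickets.
QUESTIONS = [
    "What is the best CRM for small businesses?",
    "Which CRM is best for a small business?",
    "How do I export contacts from my CRM to a CSV file?",
    "Can I export CRM contacts to CSV?",
]

if __name__ == "__main__":
    for i, topic in enumerate(cluster_questions(QUESTIONS), start=1):
        print(f"Topic {i}: {topic}")
```

With the sample data, the two “best CRM” questions form one topic and the two export questions form another. Exact word overlap misses variants such as “business” vs. “businesses”, which is one reason real pipelines tend to use stemming or embeddings instead.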

Answer tracking

- ☐ Choose an answer tracking tool.

- ☐ Configure it to:

- ☐ Track your questions across relevant LLM surfaces.

- ☐ Run queries multiple times per question to account for variability.

- ☐ Use multiple phrasings of each question.

- ☐ Monitor:

- ☐ Presence (% of runs where you appear).

- ☐ Average rank (your position among the options listed, in runs where you appear).

- ☐ Share of voice across competitors.

- ☐ In your analytics stack, create views or reports for AI / chatbot referral traffic (e.g., sources identified via utm_source parameters) and track visits, signups, and revenue from those channels over time.
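The presence, average-rank, and share-of-voice metrics listed above can be computed from raw tracking runs along these lines. This is a minimal sketch assuming each run has already been reduced to the ordered list of brands the answer recommended. Run, presence, average_rank, share_of_voice, and the brand names are hypothetical, and tools define share of voice differently; here it is simply each brand’s share of all tracked-brand mentions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    """One execution of one phrasing of a tracked question on one LLM surface."""
    question_id: str
    phrasing: str
    brands: list[str]  # brands in the order the answer recommended them


def presence(runs: list[Run], brand: str) -> float:
    """Fraction of runs in which `brand` appears at all."""
    if not runs:
        return 0.0
    return sum(brand in run.brands for run in runs) / len(runs)


def average_rank(runs: list[Run], brand: str) -> Optional[float]:
    """Mean 1-based position of `brand`, counting only runs where it appears."""
    ranks = [run.brands.index(brand) + 1 for run in runs if brand in run.brands]
    return sum(ranks) / len(ranks) if ranks else None


def share_of_voice(runs: list[Run], tracked: tuple[str, ...]) -> dict[str, float]:
    """Each tracked brand's mentions as a fraction of all tracked-brand mentions."""
    counts = Counter(b for run in runs for b in run.brands if b in tracked)
    total = sum(counts.values())
    return {b: (counts[b] / total if total else 0.0) for b in tracked}


# Hypothetical results: one question, two phrasings, each run twice.
RUNS = [
    Run("crm-smb", "best CRM for a small business", ["Acme", "Globex"]),
    Run("crm-smb", "best CRM for a small business", ["Globex"]),
    Run("crm-smb", "which CRM should a 10-person startup use", ["Globex", "Acme", "Initech"]),
    Run("crm-smb", "which CRM should a 10-person startup use", ["Initech", "Acme"]),
]
TRACKED = ("Acme", "Globex", "Initech")

if __name__ == "__main__":
    for brand in TRACKED:
        print(brand, presence(RUNS, brand), average_rank(RUNS, brand))
    print(share_of_voice(RUNS, TRACKED))
```

With the sample data, Acme appears in three of four runs (presence 0.75) at an average rank of about 1.67, and holds 3 of the 8 tracked mentions (share of voice 0.375). Reporting average rank only over runs where a brand appears keeps it separate from presence, so the two metrics do not double-count absences.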

On-site content

- ☐ For each topic, analyze:

- ☐ Which page types currently win citations.

- ☐ Create or refine:

- ☐ Topic landing pages covering main and sub-questions.

- ☐ Supporting help-center articles for detailed workflows.

- ☐ Structure key pages in question-and-answer form:

- ☐ Use headings phrased as questions that mirror how users prompt AI assistants.

- ☐ Answer each question concisely before adding depth and context.

- ☐ Strengthen:

- ☐ Internal linking between related pages and help content.

Help center

- ☐ Move the help center to a subdirectory if feasible.

- ☐ Improve cross-linking between help articles.

- ☐ Identify long-tail workflows not yet documented.

- ☐ Create high-quality help articles for these workflows.

- ☐ Consider community participation for Q&A and tail coverage.

Off-site citations

- ☐ Map current citation sources by type:

- ☐ Video (YouTube, Vimeo)

- ☐ UGC (Reddit, Quora)

- ☐ Affiliates and large media

- ☐ Marketplaces and review platforms

- ☐ Independent review and comparison sites in your vertical (e.g., SaaS review platforms, specialist tech press).

- ☐ For affiliates:

- ☐ Identify key publishers in your vertical.

- ☐ Explore partnerships that would get you included in “best X” lists.

- ☐ For video:

- ☐ Produce videos on specific, high-value B2B and niche topics.

- ☐ For Reddit:

- ☐ Use real employee accounts.

- ☐ Clearly disclose identity and affiliation.

- ☐ Participate authentically in relevant threads.

Experimentation

- ☐ Design experiments using test vs. control question groups.

- ☐ Run answer tracking for pre- and post-intervention periods.

- ☐ Evaluate impact on:

- ☐ Presence

- ☐ Rank

- ☐ Share of voice

- ☐ Repeat experiments to confirm reproducibility.

- ☐ Plan for volume and intensity: many GEO wins come from running a large number of iterations over time (many questions, many topics, many tests), not from a single “perfect” experiment.
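The test-vs.-control comparison above can be summarized as a difference-in-differences on presence. The sketch below is one simple way to do it, not a prescribed method. It assumes each tracking run is recorded as a boolean (did you appear?), and the run counts are hypothetical.

```python
def presence_rate(appeared: list[bool]) -> float:
    """Fraction of tracking runs in which you appeared."""
    return sum(appeared) / len(appeared) if appeared else 0.0


def diff_in_differences(
    test_pre: list[bool],
    test_post: list[bool],
    control_pre: list[bool],
    control_post: list[bool],
) -> float:
    """Presence change on test questions minus presence change on control questions.

    Subtracting the control group's change strips out shifts that hit every
    question (for example, a model update during the test window), leaving an
    estimate of the intervention's own effect.
    """
    test_change = presence_rate(test_post) - presence_rate(test_pre)
    control_change = presence_rate(control_post) - presence_rate(control_pre)
    return test_change - control_change


def example_runs(appeared: int, total: int) -> list[bool]:
    """Build hypothetical run outcomes: `appeared` hits out of `total` runs."""
    return [True] * appeared + [False] * (total - appeared)


if __name__ == "__main__":
    effect = diff_in_differences(
        test_pre=example_runs(8, 40),
        test_post=example_runs(20, 40),
        control_pre=example_runs(10, 40),
        control_post=example_runs(12, 40),
    )
    print(f"Estimated effect on presence: {effect:+.2f}")
```

Here test presence rises from 0.20 to 0.50 while control presence rises from 0.25 to 0.30, giving an estimated lift of 0.25. A single comparison is noisy, so repeating the experiment is what makes a lift like this trustworthy.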

Organization

- ☐ Assign GEO responsibilities to:

- ☐ SEO team for on-site content and tracking.

- ☐ Marketing/community team for off-site content and citations.

- ☐ Build a feedback loop where:

- ☐ Insights from experiments refine content and channel strategies.

- ☐ Sales and support feed real questions back into the question library.

By following this guide, a team can systematically approach GEO as:

- An extension of proven SEO thinking.

- A structured strategy for winning visibility in LLM answers.

- A way to tap into a rapidly growing, highly qualified acquisition channel.